OmniConsistency: National University of Singapore Releases a New Image Style Transfer Model

Published: 2025-05-31 17:54:18

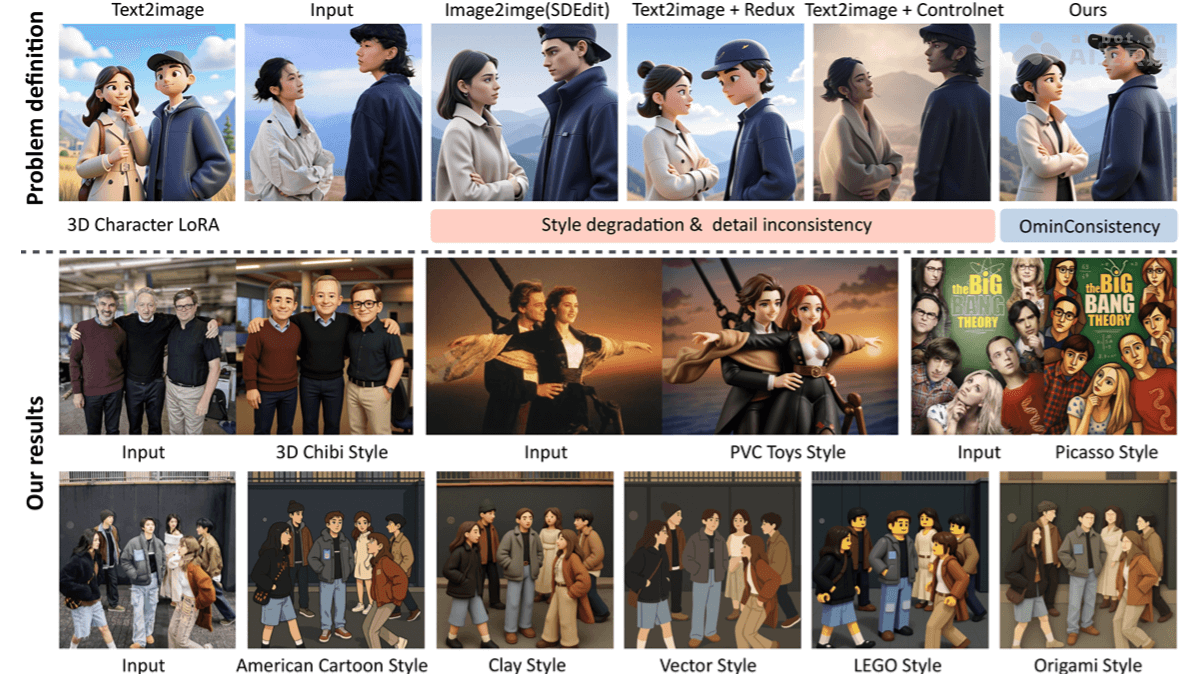

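OmniConsistency is an image style transfer model developed at the National University of Singapore that focuses on keeping stylized images consistent in complex scenes. It uses a two-stage training strategy that separates style learning from consistency learning, so that semantics, structure, and detail are preserved across different styles. OmniConsistency integrates seamlessly with any style-specific LoRA module, giving efficient and flexible stylization. In experiments its performance is comparable to GPT-4o, and it shows greater flexibility and generalization.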

OmniConsistency Explained

OmniConsistency is an image style transfer model developed by the National University of Singapore. It targets the problem of keeping stylized images consistent in complex scenes. The model is trained on large-scale paired stylized data with a two-stage strategy that decouples style learning from consistency learning, which preserves semantics, structure, and detail across styles. OmniConsistency integrates seamlessly with any style-specific LoRA module for efficient and flexible stylization. In experiments, its performance is comparable to GPT-4o, and it shows greater flexibility and generalization.

Key Features of OmniConsistency

- Style Consistency: Keeps the target style intact across many different styles, without style degradation.

- Content Consistency: Preserves the original image's semantics and details during stylization, ensuring content integrity.

- Style Agnosticism: Seamlessly integrates with any style-specific LoRA (Low-Rank Adaptation) modules, supporting diverse stylization tasks.

- Flexibility: Offers flexible layout control without relying on traditional geometric constraints like edge maps or sketches.
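As a rough illustration of what integrating a LoRA module means: LoRA leaves a pretrained weight matrix W frozen and adds a low-rank update, so a layer computes W x + scale * B (A x). The sketch below is a minimal pure-Python version of that idea. The names `matvec`, `lora_forward`, and `scale`, and the toy matrices, are illustrative assumptions and are not taken from the OmniConsistency code base.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply matrix m (rows x cols) by vector v (length cols)."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def lora_forward(w: Matrix, a: Matrix, b: Matrix, x: Vector,
                 scale: float = 1.0) -> Vector:
    """Compute W x + scale * B (A x): frozen base weight plus a low-rank update.

    w: (out x in) frozen base weight
    a: (rank x in) down-projection
    b: (out x rank) up-projection
    """
    base = matvec(w, x)
    update = matvec(b, matvec(a, x))
    return [y + scale * u for y, u in zip(base, update)]


# Toy example (hypothetical values): identity base weight, rank-1 adapter.
W = [[1.0, 0.0], [0.0, 1.0]]
A = [[1.0, 1.0]]      # rank 1, maps 2 -> 1
B = [[0.5], [0.0]]    # maps 1 -> 2

x = [2.0, 4.0]
with_style = lora_forward(W, A, B, x, scale=1.0)
without_style = lora_forward(W, A, B, x, scale=0.0)  # scale 0 disables the adapter
```

Because the base weight is never modified, switching to another style in this sketch only means passing a different pair of `a` and `b` matrices.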

Technical Underpinnings of OmniConsistency

- Two-Stage Training Strategy: Stage one trains multiple style-specific LoRA modules independently, so that each captures the distinctive details of its style. Stage two trains a consistency module on paired data while dynamically switching between the style LoRA modules, so that the consistency module concentrates on structural and semantic consistency and does not absorb the features of any particular style.

- Consistency LoRA Module: Adds low-rank adaptation (LoRA) modules to the conditional branch only, so the main network's stylization ability is not disturbed. A causal attention mechanism has the condition tokens interact among themselves while keeping the main branch (noise and text tokens) clean for causal modeling.

- Condition Token Mapping (CTM): Guides high-resolution generation with low-resolution condition images. A mapping mechanism keeps the two spatially aligned, which reduces memory and computational overhead.

- Feature Reuse: Caches the intermediate features of condition tokens during the diffusion process to avoid redundant computation and speed up inference.

- Data-Driven Consistency Learning: Builds a high-quality paired dataset of 2,600 image pairs across 22 styles and learns the semantic and structural consistency mapping from that data.
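The attention restriction described for the consistency LoRA module can be pictured as a boolean mask over the token sequence. The sketch below is one reading of that description, not the project's implementation. It assumes tokens are ordered as text, noise, then condition; that condition-token queries may attend only to condition-token keys; and that main-branch queries (text and noise) may attend to every token. The function name `build_attention_mask` and the token counts are hypothetical.

```python
from typing import List


def build_attention_mask(n_text: int, n_noise: int, n_cond: int) -> List[List[bool]]:
    """Return mask[q][k] == True when query token q may attend to key token k.

    Assumed token order: [text | noise | condition].
    """
    n_main = n_text + n_noise
    total = n_main + n_cond
    mask = []
    for q in range(total):
        q_is_cond = q >= n_main
        row = []
        for k in range(total):
            k_is_cond = k >= n_main
            if q_is_cond:
                # Condition queries only see other condition tokens.
                row.append(k_is_cond)
            else:
                # Main-branch queries (text, noise) see every token (assumption).
                row.append(True)
        mask.append(row)
    return mask


mask = build_attention_mask(n_text=1, n_noise=2, n_cond=2)
for row in mask:
    print("".join("1" if allowed else "0" for allowed in row))
```

With one text token, two noise token and two condition tokens in that order, the three main-branch rows print `11111` and the two condition rows print `00011`. Under these assumptions the condition branch never reads from the text or noise tokens, so its features do not depend on the main branch.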

Project Links for OmniConsistency

- GitHub Repository: http://github.com/showlab/OmniConsistency

- Hugging Face Model Hub: http://huggingface.co/showlab/OmniConsistency

- arXiv Technical Paper: http://arxiv.org/pdf/2505.18445

- Online Demo: http://huggingface.co/spaces/yiren98/OmniConsistency

Practical Applications of OmniConsistency

- Art Creation: Applies various art styles such as anime, oil painting, and sketches to images, aiding artists in quickly generating stylized works.

- Content Generation: Rapidly generates images adhering to specific styles for content creation, enhancing diversity and appeal.

- Advertising Design: Creates visually appealing and brand-consistent images for advertisements and marketing materials.

- Game Development: Quickly produces stylized characters and environments for games, improving development efficiency.

- Virtual Reality (VR) and Augmented Reality (AR): Generates stylized virtual elements to enhance user experiences.
